# Training the Equipment Optimal-Efficiency Analysis Model

# 1. Description

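- Train a suitable analysis model on the equipment optimal-efficiency data and save the model weights.

- After training completes, send a message to FastWeb to update the equipment optimal-efficiency training log.

- Send a message to the FastWeb messaging service to announce that model training has finished.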
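Both FastWeb messages are JSON strings POSTed to `fastweb_url` with a `restapi` query parameter (`pid_update_trainlog` for the training log, `sendwsmsg` for the messaging service). As a minimal sketch, the hypothetical helpers `build_trainlog_payload` and `build_callback_payload` below rebuild those bodies using the field names from the sample program in section 2. They are illustrations, not FastWeb API functions, and they assume `model_path` is an empty string when no weights file was saved.

```python
import json


def build_trainlog_payload(guid, lr, num_epochs, batch_size,
                           train_loss, validate_loss, model_path=""):
    """Hypothetical helper: body for POST {fastweb_url}/?restapi=pid_update_trainlog.

    Assumption: ismodel is True exactly when a weights file was saved,
    i.e. when model_path is a non-empty string.
    """
    return json.dumps({
        "guid": guid, "lr": lr, "num_epochs": num_epochs, "batch_size": batch_size,
        "train_loss": train_loss, "validate_loss": validate_loss,
        "isfinish": True, "ismodel": bool(model_path), "model_path": model_path,
    })


def build_callback_payload(username, tag, message):
    """Hypothetical helper: body for POST {fastweb_url}/?restapi=sendwsmsg.

    Asks FastWeb to fire the "update" callback of the WebHomeFrame component
    with a success message, using the same fields as the sample program.
    """
    return json.dumps({
        "username": username, "action": "callback", "tag": tag,
        "data": {
            "callbackcomponent": "WebHomeFrame",
            "callbackeventname": "update",
            "callbackparams": [
                {"paramname": "messagetype", "paramvalue": "success"},
                {"paramname": "title", "paramvalue": "success"},
                {"paramname": "message", "paramvalue": message},
            ],
        },
    })


if __name__ == "__main__":
    # Example values only
    print(build_trainlog_payload("333", 0.001, 1000, 32, 0.05, 0.08,
                                 "model/esp32_20230515120000.pt"))
    print(build_callback_payload("admin", "0", "Model training completed"))
```

Keeping payload construction in small functions like these makes the JSON easy to log and check before the `requests.post` calls.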

# 2. Designing the Python Program

The sample Python program is as follows:

```python
# Model training
import csv
import datetime
import json
import logging
import os
import random
from logging.handlers import TimedRotatingFileHandler

import requests
import torch
import torch.nn as nn
import torch.optim as optim

fastweb_url = 'http://192.168.0.201:8803'


# Create the directory if it does not exist yet
def create_directory_if_not_exists(directory_path):
    os.makedirs(directory_path, exist_ok=True)


# Neural network: 4 inputs (kp, ki, kd, setpoint) -> 10 hidden layers of 100 ReLU units -> 1 output (sumWeight)
class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.hidden_layers = nn.Sequential(
            nn.Linear(4, 100),
            nn.ReLU(),
        )
        # Append 9 more hidden layers of 100 units each
        for i in range(9):
            self.hidden_layers.add_module(f'hidden_{i + 1}', nn.Linear(100, 100))
            self.hidden_layers.add_module(f'relu_{i + 1}', nn.ReLU())
        self.output_layer = nn.Linear(100, 1)

    def forward(self, x):
        x = self.hidden_layers(x)
        x = self.output_layer(x)
        # (batch, 1) -> (batch,) so the output has the same shape as the targets
        return x.squeeze(-1)


# Huber loss: quadratic for residuals below delta, linear above it
def huber_loss(pred, target, delta):
    residual = torch.abs(pred - target)
    loss = torch.where(residual < delta,
                       0.5 * residual ** 2,
                       delta * (residual - 0.5 * delta))
    return loss.mean()


# Use the GPU if one is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


# Split the dataset into a training set and a validation set (shuffles in place)
def split_dataset(data, split_ratio):
    random.shuffle(data)
    split_index = int(len(data) * split_ratio)
    return data[:split_index], data[split_index:]


# Save a dataset as a CSV file
def save_dataset_to_csv(filename, data):
    with open(filename, 'w', newline='') as csvfile:
        writer = csv.writer(csvfile)
        writer.writerow(['kp', 'ki', 'kd', 'setpoint', 'sumWeight'])
        writer.writerows(data)


# Load a dataset from a CSV file, converting every value to float
def load_dataset_from_csv(filename):
    data = []
    with open(filename, 'r', newline='') as csvfile:
        reader = csv.reader(csvfile)
        next(reader)  # skip the header row
        for row in reader:
            data.append([float(value) for value in row])
    return data


def main():
    # Create the working directories first: the log handler below needs log/ to exist
    create_directory_if_not_exists('log/')
    create_directory_if_not_exists('data/')
    create_directory_if_not_exists('model/')

    # Configure logging; close and remove handlers left over from a previous run
    logger = logging.getLogger('__dcc_pid_train__')
    for handler in list(logger.handlers):
        handler.close()
        logger.removeHandler(handler)
    # Pass the encoding to the constructor: the handler opens the file immediately
    log_handler = TimedRotatingFileHandler('log/dcc_pid_train.log', when="D", interval=1,
                                           backupCount=7, encoding="utf-8")
    log_handler.suffix = "%Y-%m-%d.log"
    log_handler.setFormatter(logging.Formatter('%(asctime)s - %(levelname)s - %(message)s'))
    logger.setLevel(logging.DEBUG)
    logger.addHandler(log_handler)

    # Run number built from the current date and time, e.g. 20230515120000
    auto_number = datetime.datetime.now().strftime("%Y%m%d%H%M%S")

    try:
        # input_value is supplied by FastWeb when the script runs (see the parameter description below)
        params = json.loads(input_value.value)
        # Example: params = {'username': 'admin', 'tag': '0', 'guid': '333', 'eqname': 'esp32', 'periodid': '1',
        #                    'lr': 0.001, 'weight_decay': 0, 'lambda': 0, 'num_epochs': 1000, 'batch_size': 32}
        guid = params["guid"]
        train_csv = f'data/train_{params["eqname"]}_{auto_number}.csv'
        validate_csv = f'data/validate_{params["eqname"]}_{auto_number}.csv'

        # Request the dataset from FastWeb
        query_params = {
            'restapi': 'pid_energy_efficiency',
            'eqname': params["eqname"],
            'periodid': params["periodid"],
        }
        response = requests.get(fastweb_url, params=query_params)
        response.raise_for_status()
        data = response.json()
        dataset = [[item['kp'], item['ki'], item['kd'], item['setpoint'], item['sumWeight']]
                   for item in data["tabledata"]]

        # Split 90% / 10% into training and validation sets
        train_data, validate_data = split_dataset(dataset, split_ratio=0.9)

        # Save both sets as CSV files, then reload them (this also converts every value to float)
        save_dataset_to_csv(train_csv, train_data)
        save_dataset_to_csv(validate_csv, validate_data)
        train_data = load_dataset_from_csv(train_csv)
        validate_data = load_dataset_from_csv(validate_csv)

        # Convert to tensors and move them to the selected device
        train_X = torch.tensor([row[:-1] for row in train_data], dtype=torch.float32).to(device)
        train_y = torch.tensor([row[-1] for row in train_data], dtype=torch.float32).to(device)
        validate_X = torch.tensor([row[:-1] for row in validate_data], dtype=torch.float32).to(device)
        validate_y = torch.tensor([row[-1] for row in validate_data], dtype=torch.float32).to(device)

        # Create the network on the selected device
        model_train = NeuralNetwork().to(device)

        # Training parameters
        num_epochs = params['num_epochs']
        batch_size = params['batch_size']

        # Early-stopping parameters
        early_stop_threshold = 0.001  # a check is "worse" when the validation loss exceeds the best loss by more than this
        early_stop_patience = 5       # stop after this many consecutive worse checks
        best_validate_loss = float('inf')
        patience_counter = 0          # number of consecutive worse checks

        # Optimizer (Adam is an alternative)
        # optimizer = optim.Adam(model_train.parameters(), lr=params['lr'])
        optimizer = optim.SGD(model_train.parameters(), lr=params['lr'])
        criterion = huber_loss
        delta = 0.1  # Huber threshold

        for epoch in range(num_epochs):
            # Mini-batch training
            for i in range(0, train_X.shape[0], batch_size):
                inputs = train_X[i:i + batch_size]
                targets = train_y[i:i + batch_size]
                # Forward pass
                outputs = model_train(inputs)
                loss = criterion(outputs, targets, delta)
                # Backward pass and parameter update
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            # Every 100 epochs, log the training and validation losses and check early stopping
            if (epoch + 1) % 100 == 0:
                with torch.no_grad():
                    train_loss = criterion(model_train(train_X), train_y, delta).item()
                    validate_loss = criterion(model_train(validate_X), validate_y, delta).item()
                logger.info(f'Epoch [{epoch + 1}/{num_epochs}], Train Loss: {train_loss}, Validate Loss: {validate_loss}')

                if validate_loss > best_validate_loss + early_stop_threshold:
                    patience_counter += 1
                else:
                    patience_counter = 0
                    # Keep the lowest validation loss seen so far
                    best_validate_loss = min(best_validate_loss, validate_loss)

                if patience_counter >= early_stop_patience:
                    logger.info('Early stopping triggered. Training stopped.')
                    break

        # Final training and validation losses
        with torch.no_grad():
            final_train_loss = criterion(model_train(train_X), train_y, delta).item()
            final_validate_loss = criterion(model_train(validate_X), validate_y, delta).item()
        logger.info(f'Final Train Loss: {final_train_loss}, Final Validate Loss: {final_validate_loss}')

        # Save the weights only when the final validation loss is below 1
        ismodel = False
        model_path = ''  # stays empty when no model is saved
        if final_validate_loss < 1:
            model_path = f"model/{params['eqname']}_{auto_number}.pt"
            torch.save(model_train.state_dict(), model_path)
            logger.info("Model saved")
            ismodel = True

        # Update the training log in FastWeb
        isfinish = True
        url = fastweb_url + "/?restapi=pid_update_trainlog"
        data = json.dumps({"guid": guid, "lr": params['lr'], "num_epochs": params['num_epochs'],
                           "batch_size": params['batch_size'], "train_loss": final_train_loss,
                           "validate_loss": final_validate_loss, "isfinish": isfinish,
                           "ismodel": ismodel, "model_path": model_path})
        logger.info(data)
        response = requests.post(url, data=data)
        if response.status_code == 200:
            logger.info("Request succeeded")

        # Delete the temporary CSV files
        os.remove(train_csv)
        os.remove(validate_csv)

        # Notify FastWeb over HTTP that training has finished
        data = json.dumps({
            "username": params['username'], "action": "callback", "tag": params['tag'],
            "data": {
                "callbackcomponent": "WebHomeFrame", "callbackeventname": "update",
                "callbackparams": [
                    {"paramname": "messagetype", "paramvalue": "success"},
                    {"paramname": "title", "paramvalue": "success"},
                    {"paramname": "message", "paramvalue": "Equipment optimal operating efficiency model training completed"},
                ],
            },
        })
        input_value.value = 'Training completed'
        url = fastweb_url + "/?restapi=sendwsmsg"
        response = requests.post(url, data=data)
        if response.status_code == 200:
            logger.info("Request succeeded")

        # Delete every global name except the system ones listed in keep (such as __name__)
        keep = {'__name__', '__doc__', '__package__', '__loader__', '__spec__', '__builtins__'}
        for name in list(globals().keys()):
            if name not in keep:
                del globals()[name]
    except Exception as e:
        # Log the error together with its traceback
        logger.exception(e)


if __name__ == "__main__":
    main()
```
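In the sample program, the early-stopping check runs only every 100 epochs: a check counts as worse when the validation loss exceeds the best loss by more than `early_stop_threshold`, and training stops after `early_stop_patience` consecutive worse checks. As a minimal sketch, the hypothetical `EarlyStopping` class below isolates that rule in plain Python (no PyTorch needed) so it can be tested on its own; the loss sequence in the usage block is made-up data.

```python
class EarlyStopping:
    """Hypothetical patience-based early stopping with the same two knobs as the
    sample program (early_stop_threshold, early_stop_patience)."""

    def __init__(self, threshold=0.001, patience=5):
        self.threshold = threshold
        self.patience = patience
        self.best = float("inf")  # lowest validation loss seen so far
        self.counter = 0          # consecutive checks that were worse than best + threshold

    def step(self, validate_loss):
        """Record one validation check; return True when training should stop."""
        if validate_loss > self.best + self.threshold:
            self.counter += 1
        else:
            # Not worse: reset the streak and keep the lower of the two losses
            self.counter = 0
            self.best = min(self.best, validate_loss)
        return self.counter >= self.patience


if __name__ == "__main__":
    stopper = EarlyStopping(threshold=0.001, patience=3)
    losses = [0.50, 0.40, 0.4005, 0.45, 0.46, 0.47, 0.30]  # made-up validation losses
    for check, loss in enumerate(losses, start=1):
        if stopper.step(loss):
            print(f"stop at check {check}, best loss {stopper.best}")  # prints: stop at check 6, best loss 0.4
            break
```

Design note: on a check that is not worse, the sketch keeps the lowest loss seen so far (`min`), so a loss that rose by less than the threshold does not raise the baseline that later checks are compared against.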


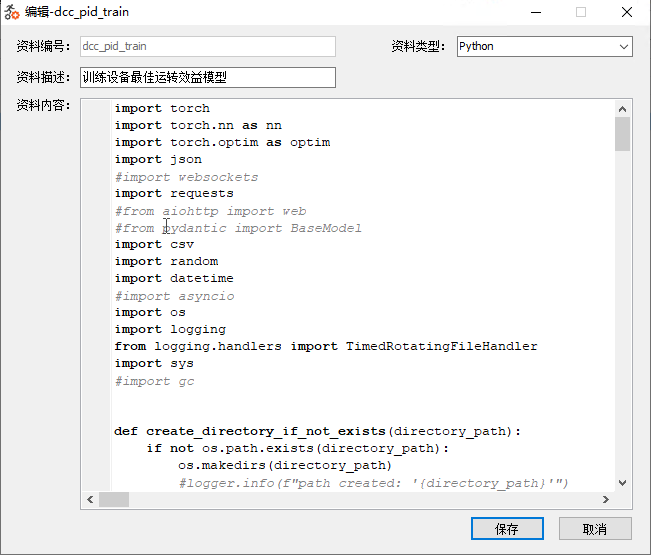

Save the program above as preset data, in the format shown below.

The parameter defined in the program above is described as follows:

- Parameter name: `input_value`.

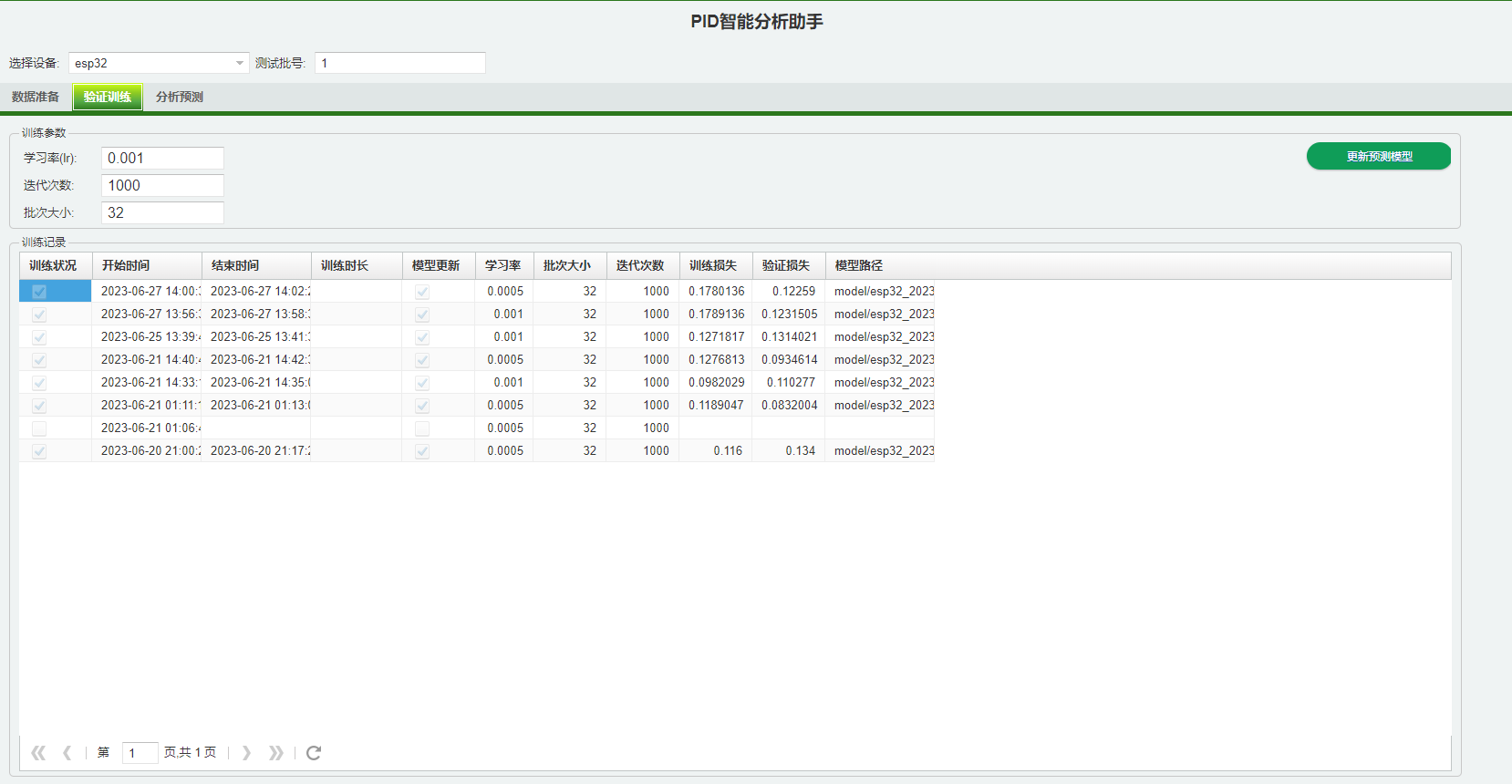
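When the script is tested outside FastWeb, `input_value` does not exist. The program relies on only two things: `json.loads(input_value.value)` must return the parameter dictionary, and `.value` must accept a status string at the end. As a minimal sketch under that assumption, a `types.SimpleNamespace` stand-in can be defined at module level before `main()` is called; the stand-in and the `REQUIRED_KEYS` check are hypothetical (this is not how FastWeb supplies the variable), and the values are the example from the comment in the program.

```python
import json
from types import SimpleNamespace

# Hypothetical stand-in for the input_value object that FastWeb provides at run time
example_params = {
    "username": "admin", "tag": "0", "guid": "333", "eqname": "esp32", "periodid": "1",
    "lr": 0.001, "weight_decay": 0, "lambda": 0, "num_epochs": 1000, "batch_size": 32,
}
input_value = SimpleNamespace(value=json.dumps(example_params))

# Keys that main() actually reads from the parameters
REQUIRED_KEYS = {"username", "tag", "guid", "eqname", "periodid", "lr", "num_epochs", "batch_size"}

params = json.loads(input_value.value)
missing = REQUIRED_KEYS - set(params)
if missing:
    raise KeyError(f"missing parameters: {sorted(missing)}")
```

Placed in the same file above the `if __name__ == "__main__":` guard, the stand-in lets `main()` run locally, provided the FastWeb server at `fastweb_url` is reachable for the dataset request.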

# 3. Invoking the Script

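The Python script that runs the model analysis can be invoked from FastWeb's PID intelligent analysis assistant (menu path: 數控中心-設備最佳運轉效益-PID智能分析助手). After configuring the address used to call the taskrunner, click [更新預測模型] (Update Prediction Model) on the validation and training page to start model training. When training finishes, the record of this training run and its results are displayed.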